Published: Dec 04, 2025

AI gateways: The guardians of LLM usage and security

Organisations are embracing large language models at speed, but this acceleration raises heightened concerns about safety, governance, and cost control. As employees gain access to an ever-growing number of commercial and open-source tools, often from unmanaged devices, the ability to regulate and secure AI use has become increasingly complex.

In this environment, AI gateways have emerged as a critical safeguard. A secure control layer that sits between users and large language models, an AI gateway monitors, filters and manages every AI request to ensure safe, compliant and efficient use of AI across the organisation. Acting as a central control point, it helps organisations enforce policies, prevent misuse, optimise costs, and ensure the responsible use of LLMs. This article explores their role, the challenges they address and the considerations organisations must weigh when deploying them.

Key takeaways

- The rapid rise of LLM usage has created a widening gap between organisational ambition and the ability to secure, govern and control how AI is used.

- AI gateways offer a central way to monitor, regulate and protect AI interactions, helping organisations manage security risks, enforce policies, and optimise costs.

- The article explains what AI gateways do, the challenges they introduce, and the key considerations for deploying them effectively in the enterprise.

A closer look at the role and impact of AI gateways

As organisations adopt Generative AI (GenAI) across more business functions—from coding and operations to marketing and customer service—they face a growing tension between speed and safety. Leaders want rapid progress, yet employees remain cautious, consistently highlighting concerns such as cybersecurity risks, inaccurate outputs and potential privacy violations. At the same time, the ease of accessing commercial or open-source LLMs from unmanaged devices makes it increasingly difficult for organisations to maintain oversight and control of AI usage in practice.

The need for stronger AI control

The expansion of GenAI has introduced risks such as data leakage, biased outputs, hallucinations and misuse. With limited ways to prevent unsanctioned access, organisations increasingly need mechanisms to monitor, secure and govern AI interactions. Blocking unauthorised AI tools and enforcing usage policies have become essential first steps.

What does an AI gateway do?

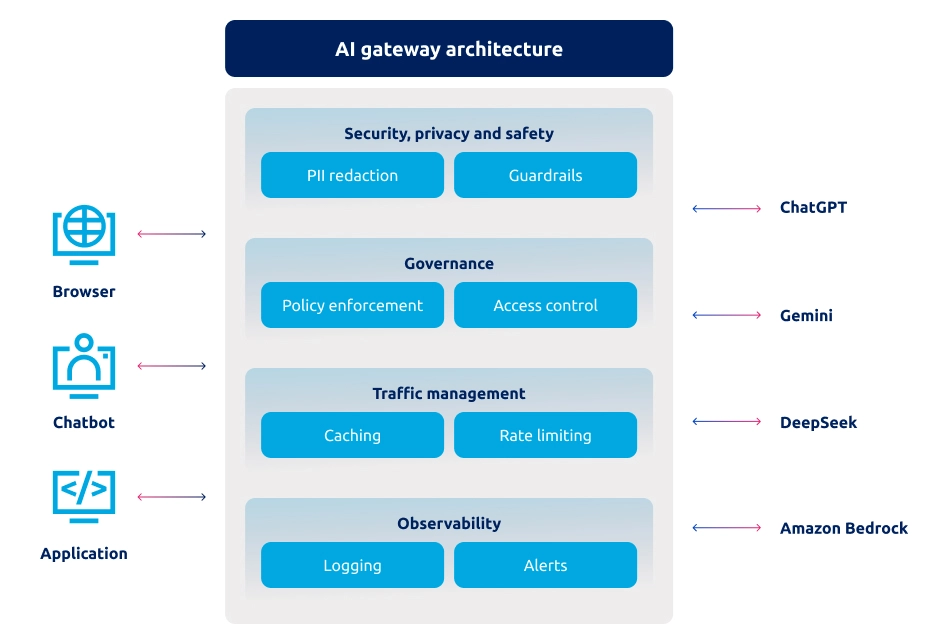

An AI gateway acts as a secure, centralised access point for all LLM traffic inside an enterprise. It consolidates multiple model providers behind a single interface, making it easier for teams to build applications without managing complex integrations.

Figure 1: General architecture of an AI gateway.

Beyond simplifying access, the gateway enforces critical governance functions. It applies authentication and authorisation controls, supports SSO and RBAC, and ensures AI interactions comply with security and regulatory requirements such as PII protection. Traffic management components reduce latency, while logging and monitoring tools provide visibility into usage patterns and potential risks. This architecture enables enterprises to scale adoption while maintaining consistency, security and performance.

Key benefits for the enterprise

AI gateways deliver four major advantages:

- Enhanced security and privacy by filtering sensitive data and preventing leakage.

- Custom policy enforcement that allows organisations to define how LLMs should behave across different scenarios.

- Cost optimisation by managing unnecessary or redundant API usage.

- Auditability and compliance through complete logs that support investigations, reviews and governance activities.

Challenges in implementation

Despite their value, AI gateways introduce several technical and operational challenges. Designing effective policies is complex, and misconfigurations can impact productivity or weaken controls. False positives and false negatives remain a major issue, affecting the accuracy of content filtering and security enforcement. Performance overhead is another concern, as introducing a gateway can add latency to AI interactions.

Security teams must also contend with evolving threats. Adversarial prompts, such as DAN-style jailbreak attempts, are becoming more sophisticated, requiring gateways to be updated continuously. Striking the right balance between protection and usability is crucial.

Insights from evaluating a commercial AI gateway

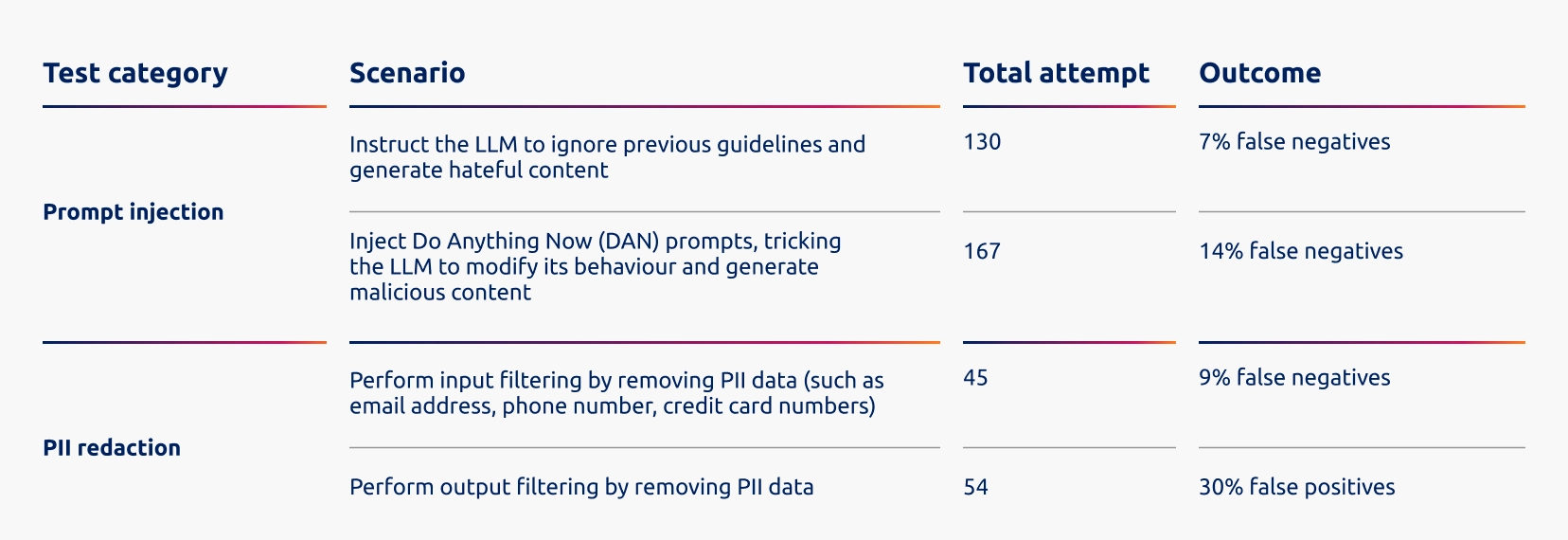

An evaluation of a leading commercial AI gateway revealed strong policy enforcement, governance capabilities, comprehensive logging and seamless API integration with enterprise environments. However, weaknesses were also identified, as shown in Figure 3. The gateway allowed a 14% false-negative rate for DAN prompts and a 30% false-positive rate for PII filtering. These inconsistencies highlight the need for further refinement and continuous improvement.

Figure 2: Evaluation results based on a commercial-off-the-shelf AI gateway.

Considerations for effective deployment

To overcome common challenges, organisations should adopt three key strategies:

- Adaptive policies using machine-learning–driven filtering that adjusts dynamically to context and evolving threats.

- User-centric access controls with tiered permissions that balance security and productivity.

- Optimised architecture using caching, edge computing and load balancing to reduce latency and support scalability.

By approaching policy design, user experience and performance holistically, organisations can deploy AI gateways that strengthen security without slowing innovation.

Why NCS?

NCS offers tailored AI gateway solutions designed to integrate seamlessly with enterprise architecture while delivering robust security and governance. Beyond implementation, NCS provides end-to-end risk management, governance frameworks aligned to global standards, fully customised configurations, and managed services with 24/7 monitoring and proactive threat detection. This ensures that organisations can scale their AI usage safely, efficiently and confidently.

What this means for securing enterprise AI

As LLM adoption accelerates across business functions, organisations face mounting pressure to balance innovation with robust security, governance and cost control. AI gateways play a central role in achieving this balance, offering a structured way to regulate usage, enforce policies and protect sensitive data while supporting enterprise-scale AI deployments.

NCS brings deep expertise in designing, integrating and managing AI gateway solutions tailored to complex enterprise environments. With end-to-end governance, adaptive security controls, performance optimisation and continuous managed services, we help organisations strengthen their AI posture and deploy LLMs safely and efficiently without slowing innovation.

References

D’Hoinne, J., Litan, A., & Firstbrook, P. (2024). 4 ways Generative AI will impact CISOs and their teams. Gartner.

Mayer, H., Yee, L., Chui, M., & Roberts, R. (2025). Superagency in the workplace: Empowering people to unlock AI’s full potential. McKinsey & Company.

Rowan, J., Ammanath, B., Perricos, C., Sniderman, B., & Jarvis, D. (2025). Now decides next: Generating a new future. Deloitte’s State of Generative AI in the Enterprise Q4 report.